-

The Fragmentation of Knowledge Work

Anthropic’s data shows AI isn’t replacing jobs yet—it’s fragmenting them. For programmers and analysts, the deeper risk may be the slow erosion of expertise

-

When Donald Knuth Lets an AI Do the Math

Knuth meets AI: Claude helped crack a combinatorial puzzle about Hamiltonian cycles that resisted neat constructions—prompting Knuth to reconsider generative AI.

-

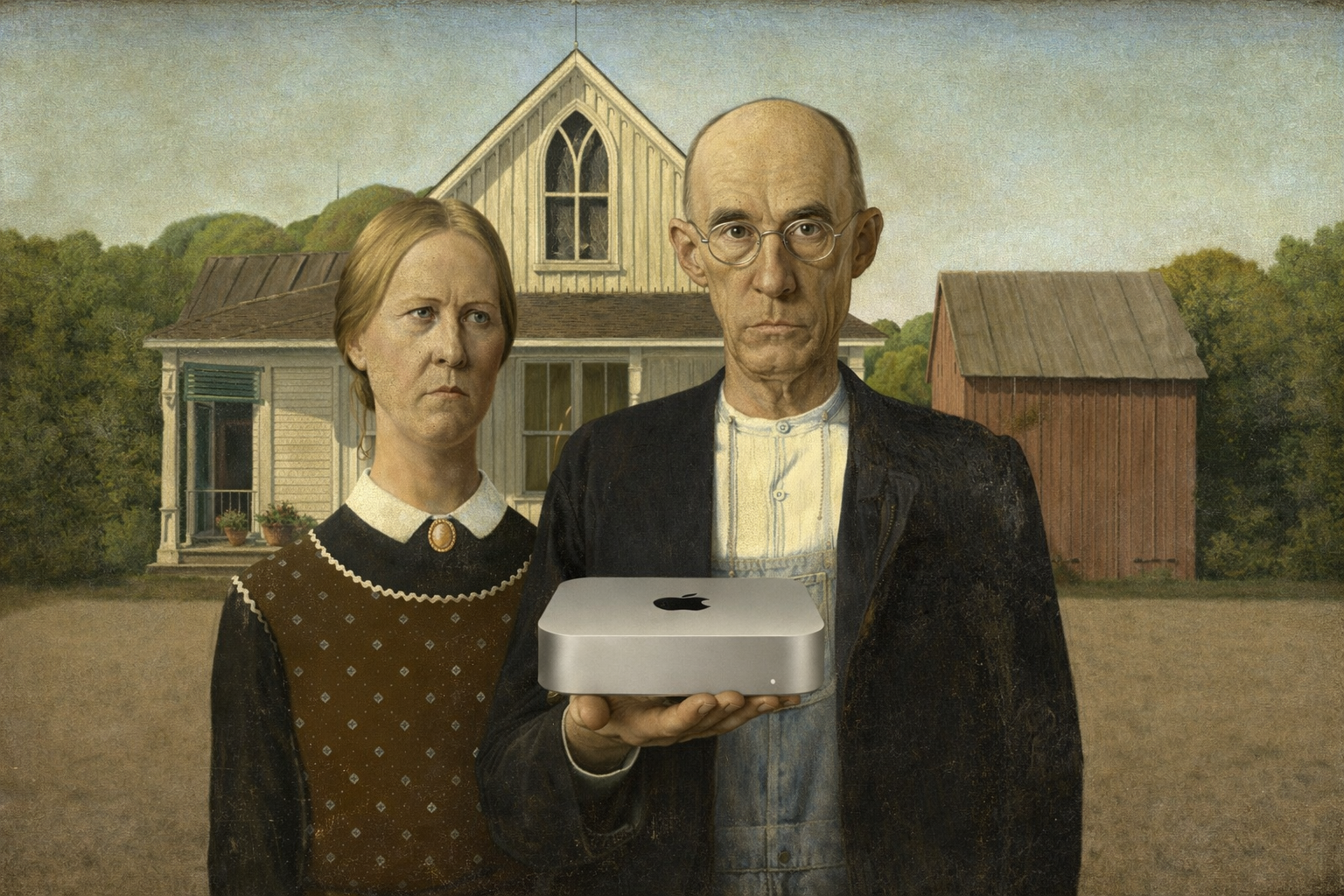

The ROM Listing Spirit Lives On in Apple Silicon

From TRS-80 ROM listings to reverse-engineered Neural Engines: Apple silicon is making home computers weird, intimate, and exciting again.

-

The Laptop Rule and the White-Collar Delusion

Shane Legg’s “Laptop Rule” cuts straight to the weak spot of modern white-collar work: if it lives entirely on a screen, AI can probably learn it.

-

The Hidden Cost of AI Speed: Why “More Output” Feels Like Burnout

AI boosts output but shifts the burden to human judgment. Here’s why that exhausts us—and practical ways to regain control.

gekko